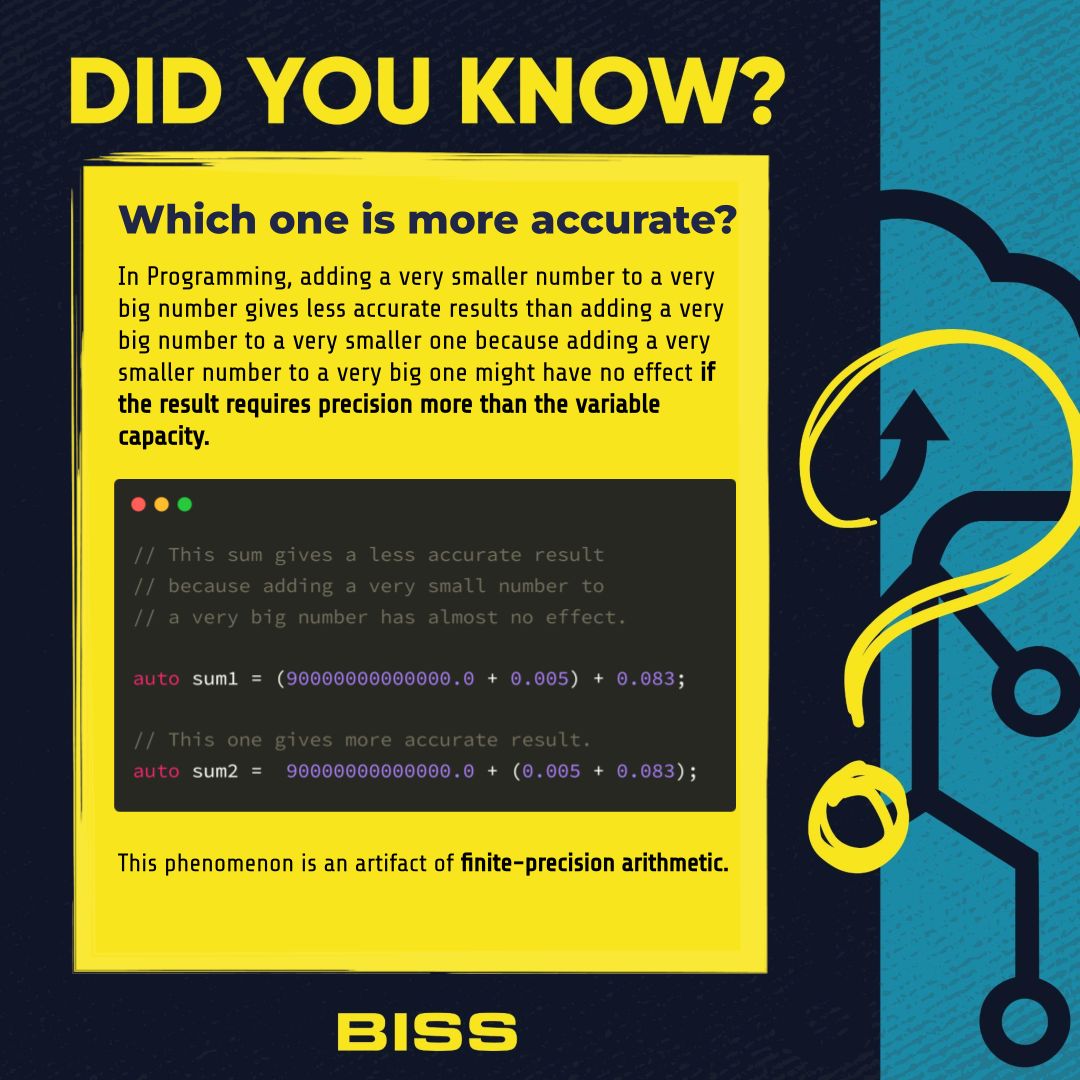

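In programming, the order in which floating-point numbers are added affects the accuracy of the result. Adding several very small numbers to a very large number one at a time gives a less accurate result than first adding the small numbers together and then adding their sum to the large number. A floating-point variable holds only a limited number of significant digits. When a small number is added to a much larger one, the part of the small number that falls below the large number's precision is rounded away, and in the extreme case the addition has no effect at all.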

Example

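```cpp
#include <cstdio>

int main() {
    // Less accurate: each small number is added to the big number separately.
    // Near 9e13, 0.005 is too small to change a double, so it is lost entirely.
    auto sum1 = (90000000000000.0 + 0.005) + 0.083;

    // More accurate: the small numbers are added together first, so their
    // combined value is large enough to survive rounding against the big one.
    auto sum2 = 90000000000000.0 + (0.005 + 0.083);

    // With IEEE 754 doubles this prints 90000000000000.078125 and
    // 90000000000000.093750; the exact sum is 90000000000000.088.
    std::printf("%f\n%f\n", sum1, sum2);
}
```

This phenomenon is an artifact of finite-precision arithmetic: after every operation, the result is rounded to the nearest value the type can represent.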